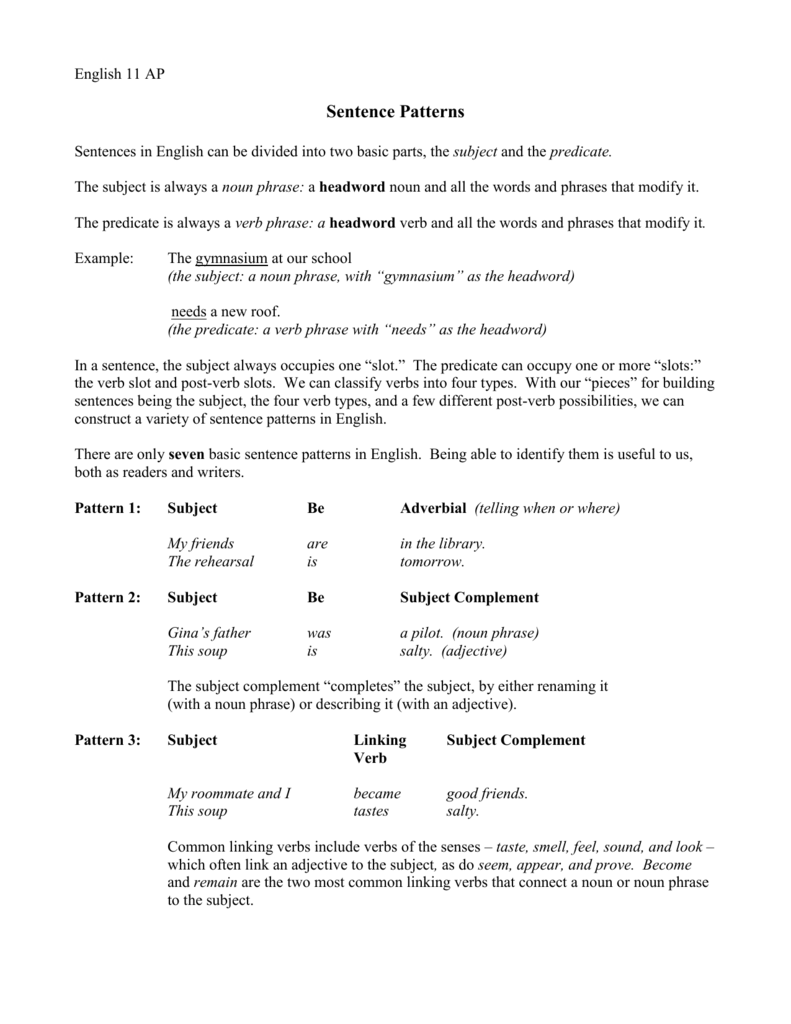

Slot Example Sentence

4/4/2022 by admin

Coordination problems are at the root of some of the largest problems we have in society, like climate change.

What are coordination problems?

Coordination problems are the root cause of a lot of issues in society. Imagine each actor is a player in a game who must choose a strategy based on the information available to them. Coordination problems are basically ‘games’ with multiple possible outcomes, where the result for each player depends on what the others choose, so every player has to decide how to act.

The prisoner’s dilemma

One example of this kind of problem is the prisoner's dilemma. Imagine you and an accomplice are arrested for murder. You are questioned at the same time, but in separate rooms, and you are not given a chance to talk to your friend before the interrogation. The twist is, there isn’t enough evidence for a murder conviction so the police want to sentence you both for robbery, or try to get one of you to testify against the other for murder. The policemen offer you both the exact same deal: you can i) stay silent or ii) screw over your friend and say he’s guilty of murder. Staying silent is what we’ll call ‘cooperating’, and betraying your friend is what we’ll call ‘defecting’. If you both stay silent then you both go to prison for just 1 year. If you both betray each other then you both get 5 years behind bars. If you stay silent and your friend betrays you then he gets off scot-free while you’re sentenced to life imprisonment, and vice versa.

If we want to have the best ‘overall outcome’, and we are certain that the other will also stay silent, then you might think that perhaps we would hypothetically cooperate and both serve 1 year. But if I’m certain that you will stay silent then I should logically decide to betray you and receive no punishment at all. If I did decide to stay silent, I would be exposed to the risk that you might rat me out, and I might have to spend my life in prison. So, the logical choice is for us both to defect - the options then would be i) I walk free or ii) I serve 5 years in prison.
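The reasoning above can be checked with a small payoff table. This is only an illustrative sketch: it encodes the sentences from the story, treats life imprisonment as an assumed 50 years so the numbers can be compared, and picks the choice that minimizes my own sentence for each thing you might do.

```python
# Years in prison for (my_choice, your_choice) -> (my_years, your_years).
# LIFE is an assumed stand-in (50 years) for life imprisonment.
LIFE = 50
PAYOFFS = {
    ("silent", "silent"): (1, 1),
    ("silent", "betray"): (LIFE, 0),
    ("betray", "silent"): (0, LIFE),
    ("betray", "betray"): (5, 5),
}
CHOICES = ("silent", "betray")


def best_response(your_choice):
    """Return the choice that minimizes my sentence, given your choice."""
    return min(CHOICES, key=lambda mine: PAYOFFS[(mine, your_choice)][0])


for yours in CHOICES:
    print(f"If you choose {yours}, my best response is {best_response(yours)}")
```

Whatever you choose, betraying gives me the shorter sentence, even though both of us staying silent would leave us better off together than both of us betraying.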

When will the games end?

We also often play ‘iterated’ coordination games in society, where we play the same game over and over. Repetition changes the payoffs (the benefits to you) so that cooperating becomes more rational than defecting, particularly if you don’t know how many more times you’ll interact with the other person.

Harvard psychologist Joshua Greene argues (video) that morals evolved as a way to enforce social norms and increase group cooperation.

Examples of coordination problems

Cooperative behavior of many animals can also be understood as an example of the prisoner's dilemma. Often animals engage in long term partnerships, which can be more specifically modeled as iterated prisoner's dilemma. For example, reciprocal food exchange (you feed me today and I’ll feed you tomorrow… or will I…?)

Examples of the prisoner’s dilemma include: countries individually benefitting economically from not limiting their carbon emissions, to everyone’s detriment; athletes using performance enhancing drugs to be more individually competitive, when all athletes would be worse off if everyone did it; etc.

Advertising is also cited as a real-life example of the prisoner’s dilemma. When cigarette advertising was legal in the United States, competing cigarette manufacturers had to decide how much money to spend on advertising. Advertising does less to increase the total number of smokers than it does to take customers from other manufacturers. In this sense, advertising is a form of defecting: if one firm advertises, its rivals have to spend more on advertising to keep their customers, leading to a downward spiral. When cigarette advertisements were banned, profits actually increased (as explained in this video).

Oligopolies can keep prices high because the few firms involved can cooperate. The more market share is dispersed across different firms (measured by the concentration ratio), the less they can cooperate (i.e. one is more likely to defect and lower prices).

Coordination problems in preventing human extinction

Toby Ord — a Senior Research Fellow in Philosophy at Oxford University — has a new book The Precipice: Existential Risk and the Future of Humanity which discusses the coordination problems preventing human extinction:

Uncoordinated action by nation states therefore suffers from a collective action problem. Each nation is inadequately incentivised to take actions that reduce risk and to avoid actions that produce risk, preferring instead to free-ride on others. Because of this, we should expect risk-reducing activities to be under-supplied and risk-increasing activities to be over-supplied. This creates a need for international coordination on existential risk.

See where you can pre-order The Precipice here.

Also check out

Prisoner’s dilemma: Real life examples, Wikipedia

Free rider problem, Wikipedia

Game Theory, Coursera

Rhasspy is designed to recognize voice commands in a template language. These commands are categorized by intent, and may contain slots or named entities, such as the color and name of a light.

- Intent Recognition

- Slots

- Speech Recognition

sentences.ini

Voice commands are stored in an ini file whose 'sections' are intents and 'values' are sentence templates.

Basic Syntax

To get started, simply list your intents (surrounded by brackets) and the possible ways of invoking them below:

If you say 'this is a sentence' after hitting the Train button, it will generate a TestIntent1.
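As a rough illustration of the layout described here, the sketch below reads a small sentences.ini with Python's configparser. The file contents are hypothetical apart from the TestIntent1 name and its sentence, and the parser options are assumptions chosen for this example rather than a statement of how Rhasspy configures its own parser.

```python
import configparser

# Hypothetical sentences.ini content: section names are intents, and each
# line below a section is one way of invoking that intent.
SENTENCES_INI = """
[TestIntent1]
this is a sentence
this is another sentence

[TestIntent2]
a sentence for a different intent
"""

# allow_no_value lets a bare sentence act as a key without "= value".
# delimiters=("=",) keeps ":" free for use inside sentence templates.
parser = configparser.ConfigParser(allow_no_value=True, delimiters=("=",))
parser.optionxform = str  # keep the original casing of each sentence
parser.read_string(SENTENCES_INI)

intents = {name: list(parser[name]) for name in parser.sections()}
for name, sentences in intents.items():
    print(name, sentences)
```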

Groups

You can group multiple words together using (parentheses) like:

Groups (sometimes called sequences) can be tagged and substituted like single words. They may also contain alternatives.

Optional Words

Within a sentence template, you can specify optional word(s) by surrounding them [with brackets]. For example:

will match:

- an example sentence with some optional words

- example sentence with some optional words

- an example sentence some optional words

- example sentence some optional words
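To make the expansion concrete, here is a minimal sketch that enumerates the sentences an optional-word template stands for. The template string is inferred from the four matches listed above, expand_optional is a hypothetical helper rather than part of Rhasspy, and nested brackets are not handled.

```python
import itertools
import re


def expand_optional(template):
    """Expand every [optional] part of a template into all its sentences."""
    # Split into literal text and bracketed parts, keeping the brackets.
    parts = re.split(r"(\[[^\]]*\])", template)
    choices = []
    for part in parts:
        if part.startswith("["):
            # An optional part is either present or absent.
            choices.append((part[1:-1], ""))
        else:
            choices.append((part,))
    sentences = []
    for combo in itertools.product(*choices):
        # Join the pieces and collapse the spaces left by dropped words.
        sentences.append(" ".join("".join(combo).split()))
    return sentences


for sentence in expand_optional("[an] example sentence [with] some optional words"):
    print(sentence)
```

Two optional words give 2 x 2 = 4 sentences, so the count doubles with each optional part you add.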

Alternatives

A set of items where only one is matched at a time is (specified | like | this). For N items, there will be N matched sentences (unless you nest optional words, etc.). The template:

will match:

- set the light to red

- set the light to green

- set the light to blue
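The sketch below expands a single group of alternatives into the three sentences listed above. It assumes the alternatives are separated by a vertical bar inside parentheses, and expand_alternatives is a hypothetical helper that handles only one un-nested group.

```python
import re


def expand_alternatives(template):
    """Expand one (a | b | c) group into one sentence per alternative."""
    match = re.search(r"\(([^()]*)\)", template)
    if match is None:
        return [template]
    options = [option.strip() for option in match.group(1).split("|")]
    before, after = template[: match.start()], template[match.end():]
    return [f"{before}{option}{after}" for option in options]


print(expand_alternatives("set the light to (red | green | blue)"))
```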

Tags

Named entities are marked in your sentence templates with {tags}. The name of the {entity} is between the curly braces, while the (value of the){entity} comes immediately before:

With the {color} tag attached to (red | green | blue), Rhasspy will match:

- set the light to [red](color)

- set the light to [green](color)

- set the light to [blue](color)

When the SetLightColor intent is recognized, the JSON event will contain a color property whose value is either 'red', 'green' or 'blue'.
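As a loose illustration of what a tag buys you, the sketch below matches a spoken sentence and returns the tagged value under the tag's name. The regular expression stands in for the tagged template, and the dictionary layout is a hypothetical simplification, not the actual shape of Rhasspy's JSON event.

```python
import json
import re

# Stand-in for a template that tags (red | green | blue) with {color}.
PATTERN = re.compile(r"^set the light to (?P<color>red|green|blue)$")


def recognize(text):
    """Return a simplified intent dict, or None if the text does not match."""
    match = PATTERN.match(text)
    if match is None:
        return None
    return {"intent": "SetLightColor", "color": match.group("color")}


print(json.dumps(recognize("set the light to green")))
```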

Tag Synonyms

Tag/named entity values can be substituted using the colon (:) inside the {curly:braces} like:

Now the name property of the intent JSON event will contain 'light_1' instead of 'living room lamp'.

Substitutions

The colon (:) is used to put something different than what's spoken into the recognized intent JSON. The left-hand side of the : is what Rhasspy expects to hear, while the right-hand side is what gets put into the intent:

In this example, the spoken phrase 'living room lamp' will be replaced by 'light_1' in the recognized intent. Substitutions work for single words, groups, alternatives, and tags:

See tag synonyms for more details on tag substitution.

Lastly, you can leave the left-hand or right-hand side (or both!) of the : empty:

When the right-hand side is empty (dropped:), the spoken word will not appear in the intent. An empty left-hand side (:added) means the word is not spoken, but will appear in the intent.
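The left-hand and right-hand behavior can be sketched for single words as follows. apply_substitutions is a hypothetical helper that only handles whitespace-separated words written as left:right; groups, alternatives and tags are out of scope here.

```python
def apply_substitutions(template_words):
    """Return (spoken, intent) word lists for words written as left:right."""
    spoken, intent = [], []
    for word in template_words:
        left, sep, right = word.partition(":")
        if not sep:
            right = left  # no colon: the word is spoken and kept as-is
        if left:
            spoken.append(left)  # what is expected to be heard
        if right:
            intent.append(right)  # what ends up in the intent
    return spoken, intent


spoken, intent = apply_substitutions(["this", "is", "dropped:", ":added"])
print(" ".join(spoken))
print(" ".join(intent))
```

Here 'dropped' is heard but left out of the intent, while 'added' is never spoken but appears in it.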

The right-hand side of a substitution can also be a group:

which is equivalent to:

Leaving both sides empty does nothing unless you attach a tag to it. This allows you to embed a named entity in a voice command without matching specific words:

An intent from the example above will contain a domain entity whose value is light.

Rules

Rules allow you to reuse parts of your sentence templates. They're defined by rule_name = ... alongside other sentences and referenced by <rule_name>. For example:

which is equivalent to:

You can share rules across intents by referencing them as <IntentName.rule_name> like:

The second intent (GetLightColor) references the colors rule from SetLightColor. Rule references without a dot must exist in the current intent.
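Rule references behave like textual substitution, which the following sketch imitates. The rules dictionary and the expand_rules helper are hypothetical; the rule body is written without parentheses because the helper adds them, and a cross-intent reference is modeled simply as a key containing a dot.

```python
import re


def expand_rules(sentence, rules):
    """Replace each <rule_name> reference with its parenthesized body."""

    def replace(match):
        body = rules[match.group(1)]
        # Rule bodies may themselves contain references, so expand them too.
        return "(" + expand_rules(body, rules) + ")"

    return re.sub(r"<([^<>]+)>", replace, sentence)


rules = {
    "colors": "red | green | blue",
    "SetLightColor.colors": "red | green | blue",
}
print(expand_rules("set the light to <colors>", rules))
print(expand_rules("is the light <SetLightColor.colors>", rules))
```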

Number Ranges

Rhasspy supports using number literals (75) and number ranges (1..10) directly in your sentence templates. During training, the num2words package is used to generate words that the speech recognizer can handle ('seventy five'). For example:

The brightness property of the recognized SetBrightness intent will automatically be converted to an integer for you. You can optionally add a step to the integer range:
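For intuition, a number range stands for every integer from its first to its last value, inclusive. The sketch below expands the 1..10 notation from the text; the step is passed as an ordinary Python argument because the template syntax for it is not reproduced here, and expand_range is a hypothetical helper.

```python
def expand_range(text, step=1):
    """Expand an inclusive range written as 'start..stop' into integers."""
    start, stop = (int(part) for part in text.split(".."))
    return list(range(start, stop + 1, step))


print(expand_range("1..10"))
print(expand_range("0..100", step=10))
```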

Under the hood, number ranges are actually references to the rhasspy/number slot program. You can override this behavior by creating your own slot_programs/rhasspy/number program or disable it entirely by setting intent.replace_numbers to false in your profile.

Slots Lists

Large alternatives can become unwieldy quickly. For example, say you have a list of movie names:

Rather than keep this list in sentences.ini, you may put each movie name on a separate line in a file named slots/movies (no file extension) and reference it as $movies. Rhasspy automatically loads all files in the slots directory of your profile and makes them available as slots lists.

For the example above, the file slots/movies should contain:

Now you can simply use the placeholder $movies in your sentence templates:

When matched, the PlayMovie intent JSON will contain a movie_name property with either 'Primer', 'Moon', etc.
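One way to picture a slots list is as a file whose lines become a group of alternatives wherever the $name placeholder appears. The sketch below does this in a temporary directory standing in for a profile; load_slot and fill_slot are hypothetical helpers, the template is an assumed example, and only the two movie names mentioned above are used.

```python
import tempfile
from pathlib import Path


def load_slot(profile_dir, slot_name):
    """Read one slot value per non-empty line from slots/<slot_name>."""
    lines = (Path(profile_dir) / "slots" / slot_name).read_text().splitlines()
    return [line.strip() for line in lines if line.strip()]


def fill_slot(template, profile_dir, slot_name):
    """Replace $slot_name in a template with a group of alternatives."""
    values = load_slot(profile_dir, slot_name)
    return template.replace(f"${slot_name}", "(" + " | ".join(values) + ")")


with tempfile.TemporaryDirectory() as profile_dir:
    slots_dir = Path(profile_dir) / "slots"
    slots_dir.mkdir()
    (slots_dir / "movies").write_text("Primer\nMoon\n")
    expanded = fill_slot("play ($movies){movie_name}", profile_dir, "movies")
    print(expanded)
```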

Make sure to re-train Rhasspy whenever you update your slot values!

Slot Directories

Slot files can be put in sub-directories under slots. A list in slots/foo/bar should be referenced in sentences.ini as $foo/bar.

Slot Synonyms

Slot values are themselves sentence templates! So you can use all of the familiar syntax from above. Slot 'synonyms' can be created simply using substitutions. So a file named slots/rooms may contain:

which is referenced by $rooms and will match:

- the den

- den

- the playroom

- playroom

- the downstairs

- downstairs

This will always output just 'den' because [the:] optionally matches 'the' and then drops the word.

Slot Programs

Slot lists are great if your slot values always stay the same and are easily written out by hand. If you have slot values that you need to be generated each time Rhasspy is trained, you can use slot programs.

Create a directory named slot_programs in your profile (e.g., $HOME/.config/rhasspy/profiles/en/slot_programs):

Add a file in the slot_programs directory with the name of your slot, e.g. colors. Write a program in this file, such as a bash script. Make sure to include the shebang and mark the file as executable:

Now, when you reference $colors in your sentences.ini, Rhasspy will run the program you wrote and collect the slot values from each line. Note that you can output all the same things as regular slots lists, including optional words, alternatives, etc.

You can pass arguments to your program using the syntax $name,arg1,arg2,... in sentences.ini (no spaces). Arguments will be passed on the command line, so arg1 and arg2 will be $1 and $2 in a bash script.
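A slot program can be any executable, so the sketch below uses Python rather than bash. It is a hypothetical slot_programs/colors file: the color names and the decision to treat extra arguments as additional colors are assumptions made for illustration.

```python
#!/usr/bin/env python3
"""Hypothetical slot program, e.g. saved as slot_programs/colors."""
import sys


def slot_values(args):
    """Return the slot values to print, one per output line."""
    colors = ["red", "green", "blue"]
    # Assumed behavior for this sketch: extra arguments add more colors,
    # so a reference like $colors,purple would also offer 'purple'.
    return colors + list(args)


if __name__ == "__main__":
    for value in slot_values(sys.argv[1:]):
        print(value)
```

In a Python script the arguments arrive in sys.argv[1:], which plays the same role as $1 and $2 in bash.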

Like regular slots lists, slot programs can also be put in sub-directories under slot_programs. A program in slot_programs/foo/bar should be referenced in sentences.ini as $foo/bar.

Built-in Slots

Rhasspy includes a few built-in slots for each language:

- $rhasspy/days - day names of the week

- $rhasspy/months - month names of the year

Converters

By default, all named entity values in a recognized intent's JSON are strings. If you need a different data type, such as an integer or float, or want to do some kind of complex conversion, use a converter:

The !name syntax calls a converter by name. Rhasspy includes several built-in converters:

- int - convert to integer

- float - convert to real

- bool - convert to boolean

False for zero or 'false' (case insensitive)

- lower - lower-case

- upper - upper-case

You can define your own converters by placing a file in the converters directory of your profile. Like slot programs, this file should contain a shebang and be marked as executable (chmod +x). A file named converters/foo/bar should be referenced as !foo/bar in sentences.ini.

Your custom converter will receive the value to convert on standard in (stdin) encoded as JSON. You should print a converted JSON value to standard out (stdout). The example below demonstrates converting a string value into an integer:
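A minimal sketch of such a converter, written in Python, might look like the following. The file name converters/to_int is hypothetical, and the in-memory streams at the bottom exist only so the example can be run without piping input.

```python
#!/usr/bin/env python3
"""Hypothetical converter, e.g. saved as converters/to_int."""
import io
import json


def convert(value):
    """Turn a JSON-decoded value such as "75" into an integer."""
    return int(value)


def main(stdin, stdout):
    """Read one JSON value, convert it, and write the result as JSON."""
    value = json.load(stdin)
    json.dump(convert(value), stdout)


# Demonstration with in-memory streams standing in for stdin and stdout.
# An installed converter would instead pass sys.stdin and sys.stdout.
demo_out = io.StringIO()
main(io.StringIO('"75"'), demo_out)
print(demo_out.getvalue())
```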

Converters can be chained, so !foo!bar will call the foo converter and then pass the result to bar.

Special Cases

If one of your sentences happens to start with an optional word (e.g., [the]), this can lead to a problem:

Python's configparser will interpret [the] as a new section header, which will produce a new intent, grammar, etc. Rhasspy handles this special case by using a backslash escape sequence (\[):

Now [the] will be properly interpreted as a sentence under [SomeIntent]. You only need to escape a [ if it's the very first character in your sentence.
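You can reproduce the problem with configparser directly. In the sketch below the sentence 'light is on' is a made-up example, the escape is a backslash placed before the opening bracket, and the parser options are assumptions for this demonstration rather than Rhasspy's exact settings.

```python
import configparser


def parse(text):
    """Parse ini text, treating each sentence line as a value-less key."""
    parser = configparser.ConfigParser(allow_no_value=True, delimiters=("=",))
    parser.optionxform = str  # keep sentences exactly as written
    parser.read_string(text)
    return parser


unescaped = parse("[SomeIntent]\n[the] light is on\n")
escaped = parse("[SomeIntent]\n\\[the] light is on\n")

# Without the escape, "[the]" is read as a second section header.
print(unescaped.sections())
# With the escape, the whole line stays a sentence under SomeIntent.
print(list(escaped["SomeIntent"]))
```

The escaped line still carries its leading backslash after parsing, so whatever consumes the sentence has to strip it.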

Motivation

The combination of an ini file and JSGF is arguably an abuse of two file formats, so why do this? At a minimum, Rhasspy needs a set of sentences grouped by intent in order to train the speech and intent recognizers. A fairly pleasant way to express this in text is as follows:

Compared to JSON, YAML, etc., there is minimal syntactic overhead for the purposes of just writing down sentences. However, its shortcomings become painfully obvious once you have more than a handful of sentences and intents:

- If two sentences are nearly identical, save for an optional word like 'the' or 'a', you have to maintain two nearly identical copies of a sentence.

- When speaking about collections of things, like colors or states (on/off), you need a sentence for every alternative choice.

- You cannot share commonly repeated phrases across sentences or intents.

- There is no way to tag phrases so the intent recognizer knows the values for an intent's slots (e.g., color).

Each of these shortcomings is addressed by considering the space between intent headings ([Intent 1], etc.) as a grammar that represents many possible voice commands. The possible sentences, stripped of their tags, are used as input to opengrm to produce a standard ARPA language model for pocketsphinx or Kaldi. The tagged sentences are then used to train an intent recognizer.

Custom Words

Rhasspy looks for words you've defined outside of your profile's base dictionary (typically base_dictionary.txt) in a custom words file (typically custom_words.txt). This is just a CMU phonetic dictionary with words/pronunciations separated by newlines:

You can use the Words tab in Rhasspy's web interface to generate this dictionary. During training, Rhasspy will merge custom_words.txt into your dictionary.txt file so the speech to text system knows how the words in your voice commands are pronounced.

Language Model Mixing

Rhasspy is designed to only respond to the voice commands you specify in sentences.ini, but both the Pocketsphinx and Kaldi speech to text systems are capable of transcribing open ended speech. While this will never be as good as a cloud-based system, Rhasspy offers it as an option.

A middle ground between open transcription and custom voice commands is language model mixing. During training, Rhasspy can mix a (large) pre-built language model with the custom-generated one. You specify a mixture weight (0-1), which controls how much of an influence the large language model has; a mixture weight of 0 makes Rhasspy sensitive only to your voice commands, which is the default.
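To build intuition for what a mixture weight does, the sketch below linearly interpolates two tiny word-probability tables. This is one common way to combine language models and is shown purely as an illustration; it is not claimed to be the procedure Rhasspy or opengrm uses, and the probabilities are made up.

```python
def mix_unigrams(custom, base, mix_weight):
    """Linearly interpolate two word-probability tables."""
    words = set(custom) | set(base)
    return {
        word: (1 - mix_weight) * custom.get(word, 0.0)
        + mix_weight * base.get(word, 0.0)
        for word in words
    }


# Made-up probabilities: 'custom' knows only the voice command words,
# while 'base' also knows a word like 'please'.
custom = {"turn": 0.5, "lamp": 0.5}
base = {"turn": 0.25, "please": 0.75}

print(mix_unigrams(custom, base, 0.0))
print(mix_unigrams(custom, base, 0.5))
```

With a weight of 0 the word 'please' has zero probability, and any weight above 0 makes it possible to recognize.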

To see the effect of language model mixing, consider a simple sentences.ini file:

This will only allow Rhasspy to understand the voice command 'turn on the living room lamp'. If we train Rhasspy and perform speech to text on a WAV file with this command, the output is no surprise:

Now let's do speech to text on a variation of the command, a WAV file with the speech 'would you please turn on the living room lamp':

The word salad here is because we're trying to recognize a voice command that was not present in sentences.ini. We could always add it, of course, and that is the preferred method for Rhasspy. There may be cases, however, where we cannot anticipate all of the variations of a voice command. For these cases, you should increase the mix_weight in your profile to something above 0:

Note that training will take significantly longer because of the size of the base language model. Now, let's test our two WAV files:

Great! Rhasspy was able to transcribe a sentence that it wasn't explicitly trained on. If you're trying this at home, you surely noticed that it takes a lot longer to process the WAV files too. In practice, it's not recommended to do mixed language modeling on lower-end hardware like a Raspberry Pi. If you need open ended speech recognition, try running Rhasspy in a client/server setup.

The Elephant in the Room

This isn't the end of the story for open ended speech recognition in Rhasspy, however, because Rhasspy also does intent recognition using the transcribed text as input. When the set of possible voice commands is known ahead of time, it's relatively easy to know what to do with each and every sentence. The flexibility gained from mixing in a base language model unfortunately places a large burden on the intent recognizer.

In our ChangeLightState example above, we're fortunate that everything works as expected:

But this works only because the default intent recognizer (fsticuffs) ignores unknown words by default, so 'would you please' is not interpreted. Changing 'lamp' to 'light' in the input sentence will reveal the problem:

This sentence would be impossible for the speech to text system to recognize without language model mixing, but it's quite possible now. We can band-aid over the problem a bit by switching to the fuzzywuzzy intent recognizer:

Now when we interpret the sentence with 'light' instead of 'lamp', we still get the expected output:

This works well for our toy example, but will not scale well when there are thousands of voice commands represented in sentences.ini or if the words used are significantly different than in the training set ('light' and 'lamp' are close enough for fuzzywuzzy).
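The idea behind fuzzy matching can be sketched with Python's standard difflib module, used here as a stand-in for fuzzywuzzy. The table of known sentences and the 0.6 cutoff are assumptions for illustration, and the scoring differs from what fuzzywuzzy computes.

```python
import difflib

# Known training sentences mapped to their intents (toy example).
KNOWN = {"turn on the living room lamp": "ChangeLightState"}


def recognize(text, cutoff=0.6):
    """Return the intent of the closest known sentence, or None."""
    matches = difflib.get_close_matches(text, list(KNOWN), n=1, cutoff=cutoff)
    return KNOWN[matches[0]] if matches else None


print(recognize("turn on the living room light"))
print(recognize("what time is it"))
```

Because every input is compared against every known sentence, the cost grows with the size of sentences.ini, which is one reason this approach scales poorly.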

A machine learning-based intent recognizer, like Rasa, would be a better choice for open ended speech.
